Jillian was honored at the Texas A&M University Graduate and Professional School’s 2025 Spring Awards Ceremony with the Outstanding Mentoring Award for Graduate and Professional Students. The award recognizes individuals who have demonstrated exceptional commitment to mentoring undergraduate students or peers. Jill is passionate about mentoring students through her lab and through organizations such as Sigma Gamma Tau, the Aerospace Engineering Honor Society, helping women navigate school and life stressors while pursuing opportunities in aerospace engineering. Congratulations Jill!

Two New Graduate Students Join VSCL in Fall 2025

VSCL is proud to welcome two new graduate research assistants:

Sadie Binz is a recent graduate of Texas A&M University with a Bachelor of Science in Aerospace Engineering. During her undergraduate studies, she was a member of Sigma Gamma Tau, the Aerospace Engineering Honor Society, and the SAE Aero Design Team, and she interned at Bell Helicopter. These experiences sparked Sadie’s interest in flight testing, and she hopes to earn her private pilot’s license in the future. She gained undergraduate research experience in VSCL, primarily in system identification, and is excited to continue her research in VSCL as a graduate student pursuing a Master of Science degree.

Raul Santos graduated from Texas A&M University in Spring 2025 with a Bachelor’s degree in Aerospace Engineering and minors in Computer Science and Mathematics. As an undergraduate, he co-founded the Society of Sonic Flight Engineers and began working in VSCL as an undergraduate research assistant. Raul has interned at Albers Aerospace as a Systems Engineering Intern on the Digital Engineering team, and at the Air Force Research Laboratory as a Safe Autonomy Intern with the Autonomy Capability Team. These experiences motivated Raul to continue pursuing his interests in flight testing and aerospace autonomy as a graduate research assistant in VSCL, beginning in Fall 2025.

VSCL Students Graduate with Bachelor of Science Degrees

Congratulations to the VSCL undergraduate research assistants who graduated with a Bachelor of Science in Aerospace Engineering from Texas A&M University on May 9, 2025!

- Sadie Binz

- Isa Martinez

- Jenna Woodard

- Raul Santos

Graduate Student Evelyn Madewell Awarded National Science Foundation Graduate Research Fellowship

Evelyn Madewell, a graduate student in the Department of Aerospace Engineering at Texas A&M University, has received a prestigious 2025 fellowship through the National Science Foundation Graduate Research Fellowship Program (NSF GRFP).

Madewell, a Ph.D. student, graduated from the University of Washington with a major in Aeronautical and Astronautical Engineering and a minor in Applied Mathematics. At UW, her capstone project received the Capstone Technical Excellence award, and her team went on to compete with its aircraft as the first U.S. team in the European Air Cargo Challenge. Madewell was also recognized as one of the “Trailblazing Women of Aerospace” in the UW Aero & Astro Highflight newspaper.

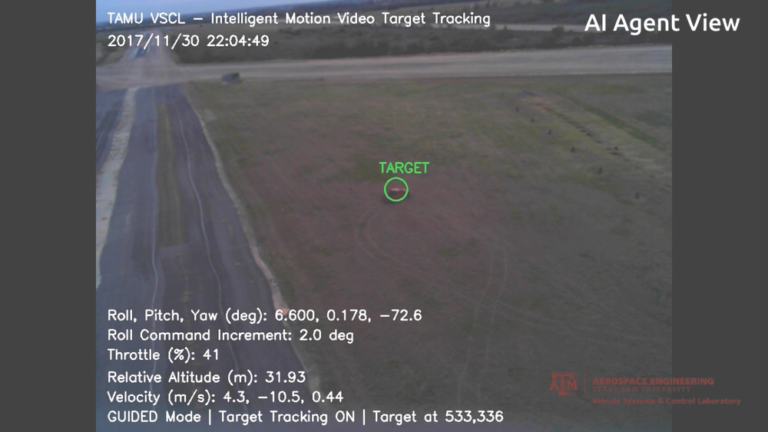

Madewell has interned with Freefly Systems as a flight test and software engineer, where she flew many of the company’s industrial drones, executed test plans, retrofitted aircraft with updates, and wrote production scripts. She then interned with Hood Technology, starting with a literature review on vision-based UAV navigation during her final undergraduate quarter. Over the summer, she developed a tracking display for in-flight UAV docking from radar test data, and drafted and iterated on skyhooking models through prototype testing. This upcoming summer, she will contribute to the Stratolaunch Talon A system as a guidance, navigation, and control intern.

Her NSF proposal notes that, despite the growing range of applications and technologies for aerial navigation, current navigation systems for Unmanned Aerial Vehicles (UAVs) typically pair GNSS position information, which can be blocked by obstacles or limited in remote environments, with IMU measurements, whose position error accumulates over time. Madewell will work with Professor Valasek to develop a novel vision-based navigation system that precisely estimates aerial vehicle pose and location in GPS-denied scenarios, enabling UAVs to reach targets and fly in previously inaccessible areas while providing a reliable alternative during GPS outages.

Madewell says “I’m thrilled to accept this prestigious honor. I am especially grateful to Dr. Valasek for his encouragement in pursuing this as well as to Dr. Vagners for his advice along the way — without these two mentors I would not be the engineer I am today. As a recipient of the NSF Graduate Research Fellowship, I am excited to utilize its resources while continuing my research alongside Dr. Valasek and the Vehicle Systems & Control Lab team as a graduate student at Texas A&M University.” Congratulations Evelyn! VSCL is thrilled to have you on our team!

VSCL Student Carla Zaramella Graduates with Bachelor of Science

VSCL undergraduate Carla Zaramella graduated in December 2024 with her Bachelor of Science degree in Aerospace Engineering. Carla will join VSCL as a master’s student starting in Spring 2025. She has been an active member of VSCL since January 2022, contributing to the System Identification project and assisting with flight testing. Carla has interned with Raytheon Technologies for the last two summers, working on land and air radar defense systems.

Dr. John Valasek Reaches Career Milestone

In October 2024, Dr. John Valasek reached a career milestone by delivering his 100th invited seminar, lecture, or panel presentation.

Chronologically:

#1 “Fighter Agility Metrics, Research, and Test,” Lockheed Advanced Development Projects Division (Skunk Works), Burbank, CA, 13 July 1990.

#100 “Multiple-Time-Scale Nonlinear Output Feedback Control of Systems With Model Uncertainties,” Department of Aerospace Engineering, University of Maryland, College Park, MD, 9 October 2024.

Congratulations Dr. Valasek!

Two New Graduate Students Join VSCL in Fall 2024

VSCL is proud to welcome two new graduate research assistants:

Evelyn Madewell joins VSCL as a Ph.D. student in the Aerospace Engineering department. She graduated in Spring 2024 from the University of Washington with a BS in Aeronautical & Astronautical Engineering and a minor in Applied Mathematics. As an undergraduate, she was a research assistant and test pilot in the Autonomous Flight Systems Lab. Her research background includes wilderness search and rescue, hazard-aware landing optimization, and beyond visual line of sight operations, which she presented at the 2024 AIAA SciTech Forum. Evelyn has interned with Freefly Systems as a flight test engineer, where she programmed a novel testing procedure for the Astro commercial drone platform, and she is currently investigating Sequential Triangulation as a way of solving 3D visual navigation in GPS-denied scenarios with Hood Technology. With her interest in flight test engineering and UAV controls, Evelyn will begin her work in VSCL by contributing to the Real-time System Identification of UAS project.

Zach Curtis graduated from Utah Tech in 2024 with a bachelor’s degree in mechanical engineering. Throughout his undergraduate years, he pursued internships to broaden his practical experience. At RAM Aviation Space & Defense, he interned with the Finite Element Analysis (FEA) group, the prototyping lab, and the controls engineering group, gaining insight into different facets of mechanical engineering. He also interned at Baxter Aerospace, a consulting group, where he worked as a mechanical engineer designing liquid nitrogen baths for nuclear fusion research. During his junior year, he conducted research at Utah Tech under the guidance of Dr. Monty Kennedy, focusing on shock and vibration testing. This work used the NASA-sponsored Shock Sat testing device to advance the study of shock testing on spacecraft.

McQuinn Awarded the Stanger Endowed Graduate Fellowship

Cassie-Kay McQuinn was selected as the recipient of the Stanger Endowed Graduate Fellowship. Established by Dianna Stanger, the fellowship gives selected fellows academic freedom by funding a portion of their support for two years. Its goal is to give students the confidence to pursue their dreams regardless of the obstacles in their way. The selection process focuses on how the student plans to leverage their Ph.D. toward the greater good and on how the student has shown commitment to advancing women’s participation and inclusion in Aerospace Engineering and other STEM fields.

Cassie-Kay was an active member of the Club of Females in Engineering (CAFE) throughout her undergraduate studies. The organization emphasizes academic excellence and career development and provides a community for women studying engineering. As a graduate student, she has been actively involved in student mentorship through VSCL and Sigma Gamma Tau (SGT), where she served as president in 2022. Cassie-Kay wants to leverage her skill set and experience to bring technology, and with it encouragement, to people and students who lack access to resources or the support to pursue a STEM career.

Cassie-Kay McQuinn Graduates with Master’s Degree

Cassie-Kay McQuinn graduated with her MS degree in aerospace engineering. Cassie is the 60th graduate student Dr. Valasek has advised to degree completion, and her thesis is titled “Online Near-Real Time Open-Loop System Identification from Closed-Loop Flight Test Data”. This work is sponsored by the National Science Foundation (NSF) Center for Autonomous Air Mobility & Sensing (CAAMS) as part of the project “Integration of System Theory with Machine Learning Tools for Data Driven System Identification”. Cassie investigated identifying linear state-space dynamic models onboard in near-real time for vehicles with and without an active flight controller.

Cassie is continuing on to a Ph.D. in VSCL, and her dissertation will build on the work she has been conducting on STARS (Safe Trusted Autonomy for Responsible Spacecraft) during a year-round internship with the Air Force Research Laboratory.

VSCL Presents System Identification Project Update at Center for Autonomous Air Mobility and Sensing (CAAMS) Summer Meeting

Together with Dr. Moble Benedict (AVFL Lab – TAMU), Dr. Puneet Singla (CASS Lab – Penn State), and Dr. Randy Beard (MAGICC Lab – BYU), Dr. Valasek presented the latest updates on the System Identification project at the National Science Foundation (NSF) CAAMS Summer Industry Advisory Board Meeting.

The project “Integration of System Theory with Machine Learning Tools for Data Driven System Identification” aims to derive nonlinear dynamical models from high-fidelity flight simulations and flight experiments by employing a unique handshake between linear time-varying subspace methods and sparse approximation tools.

The center is a partnership among academia, industry, and government to conduct pre-competitive research in autonomous air mobility and sensing. Pictured (left to right) are Undergraduate Researcher Halle Vandersloot, PhD student Cassie-Kay McQuinn, Dr. Valasek, and TAMU AERO alum Dr. Jeremy Davis, VP of Engineering at VectorNav.