Sandia National Laboratories

1 October 2019 – 30 September 2022

Total award $300,000

Reliable, autonomous navigation is a highly desirable capability that is typically viewed through the lens of sensor system development. However, methods for aiding both global and local (i.e., relative to the target) navigation via guidance/mission planning must also be considered: the choice of path can significantly impact the utility of sensor measurements.

This project seeks to demonstrate tightly integrated navigation and guidance, in which information about the sensor and the sensing environment is incorporated into the trajectory generation problem, and to verify this approach experimentally. In addition, the project investigates autonomous team targeting and multi-objective decision making that take advantage of enhanced target localization.

The technical objectives of this work are to:

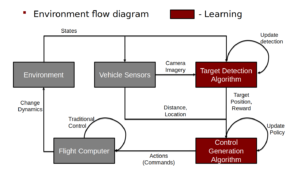

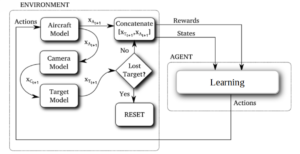

- Investigate the ability of single-vehicle Reinforcement Learning (RL) agents, trained with Deep Deterministic Policy Gradient (DDPG) learning, to task a multi-vehicle platoon.

- Develop a baseline automatic target recognition algorithm and use it to characterize, both in simulation and experimentally, the sensing requirements for acquiring the target with fixed-boresight aerial sensing platforms.

- Investigate training an RL agent in simulation to follow a predetermined trajectory until the target is within the sensor's field of view, sense the target and refine its location estimate, and then fly to the target autonomously in payload-directed flight.

- Demonstrate these capabilities in real time on vehicle platforms.

- Demonstrate the utility of the VSCL Clark Heterogeneous Multi-Vehicle Modular Control Framework for multi-vehicle tasking communication.

Working with me on this project is:

Graduate Student:

- Hannah Lehman