National Science Foundation: Center for Unmanned Aircraft Systems

Principal Investigator

This project investigates an online, near-real-time identification system for the onboard generation of locally linear models of Small Unmanned Air Systems. Angle-of-attack and sideslip angle are measured rather than estimated. Automated control surface excitation inputs consisting of doublets, triplets, and frequency sweeps are used to ensure consistent excitation and to eliminate the errors introduced by manually applied user inputs. A real-time vehicle monitoring system provides a human-in-the-loop model update capability, with the goal of ensuring the safety of the vehicle. A combined lateral/directional and longitudinal excitation is developed and demonstrated for identifying a full dynamic system and representing it in state-space form. The methodology is demonstrated with flight tests of a fixed-wing Small Unmanned Air System, with locally linear models generated onboard the vehicle during flight. The objective of this work is to show that the system can reliably and repeatedly generate accurate locally linear models that are suitable both for real-time flight control design using model-based control techniques and for post-flight modal analysis.
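The idea of exciting a vehicle with an automated doublet and fitting a locally linear state-space model to the response can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names (`doublet`, `simulate`, `fit_linear_model`), the two-state discrete-time system, and its numerical values are hypothetical and do not come from the project. Batch least squares stands in for whatever identification algorithm the project actually runs onboard, which this summary does not specify.

```python
import numpy as np


def doublet(n_steps, start, width, amplitude):
    """Doublet input: +amplitude for `width` samples, then -amplitude for `width` samples."""
    u = np.zeros(n_steps)
    u[start:start + width] = amplitude
    u[start + width:start + 2 * width] = -amplitude
    return u


def simulate(A, B, u, x0):
    """Propagate x[k+1] = A x[k] + B u[k] for a single-input system."""
    x = np.zeros((len(u) + 1, len(x0)))
    x[0] = x0
    for k in range(len(u)):
        x[k + 1] = A @ x[k] + B * u[k]
    return x


def fit_linear_model(x, u):
    """Least-squares estimate of A and B from state and input histories."""
    n = x.shape[1]
    # Each regressor row is [x[k], u[k]]; each target row is x[k+1].
    regressors = np.hstack([x[:-1], u[:, None]])
    theta, *_ = np.linalg.lstsq(regressors, x[1:], rcond=None)
    A_hat = theta[:n].T
    B_hat = theta[n:].T.ravel()
    return A_hat, B_hat


# Hypothetical stable two-state system (illustrative values only).
A_true = np.array([[0.95, 0.10],
                   [-0.20, 0.90]])
B_true = np.array([0.0, 0.1])

u = doublet(n_steps=200, start=5, width=10, amplitude=1.0)
x = simulate(A_true, B_true, u, x0=np.zeros(2))
A_hat, B_hat = fit_linear_model(x, u)
```

Because the simulated data are noise-free, the fit recovers `A_true` and `B_true` to numerical precision. With real flight data, sensor noise and unmodeled dynamics mean the estimates would need to be validated before use, which is the role the human-in-the-loop monitoring plays in the project.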

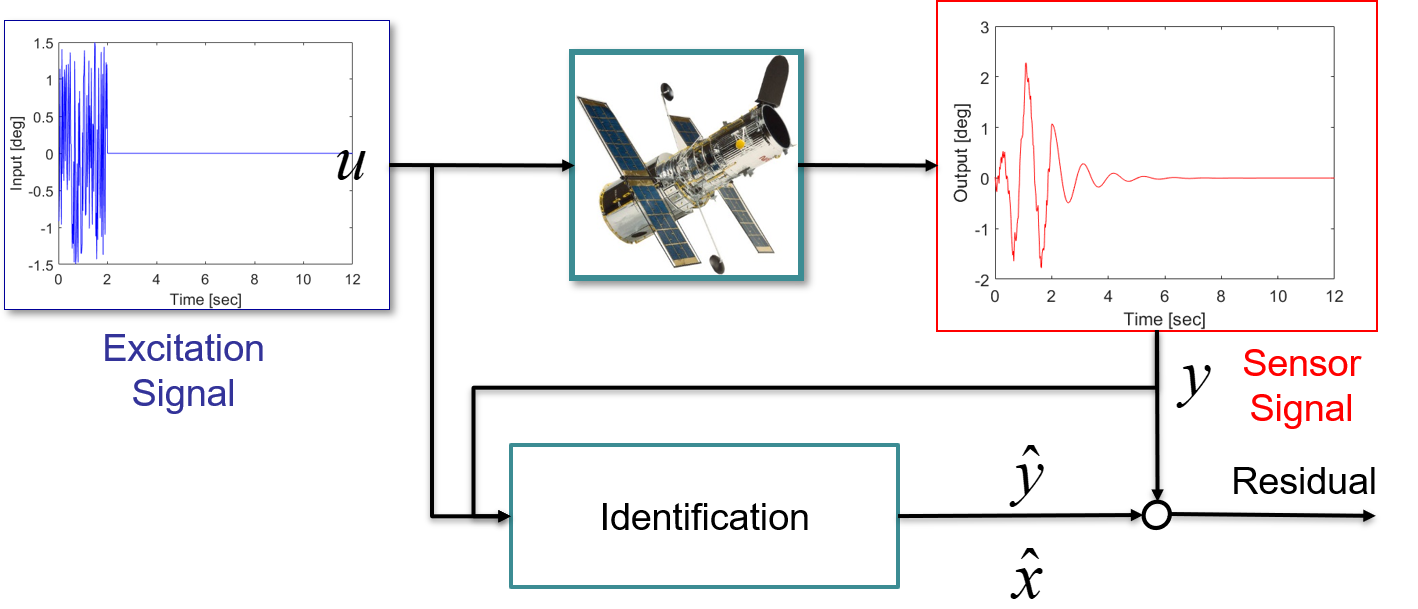

Model Identification:

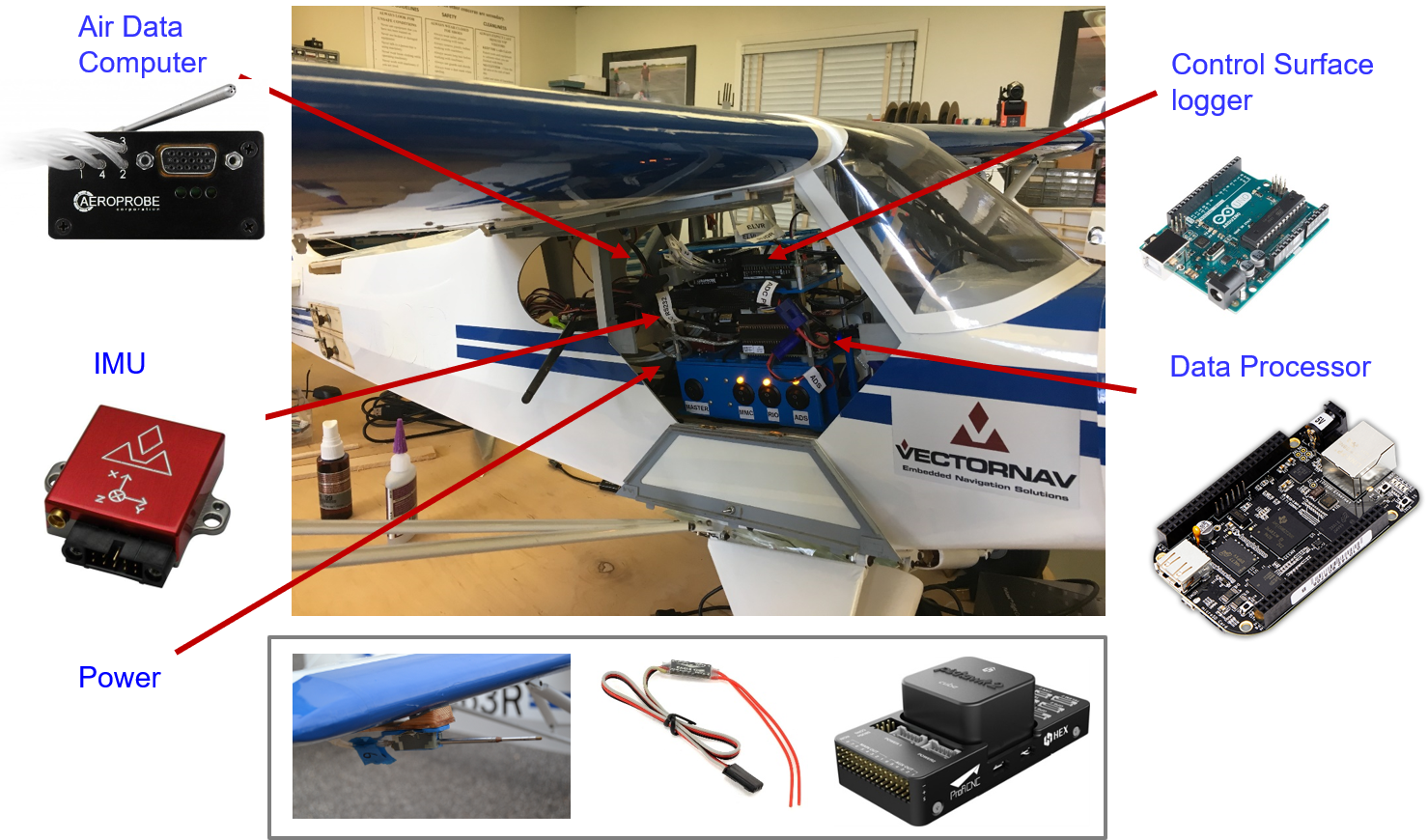

Flight Test Instrumentation:

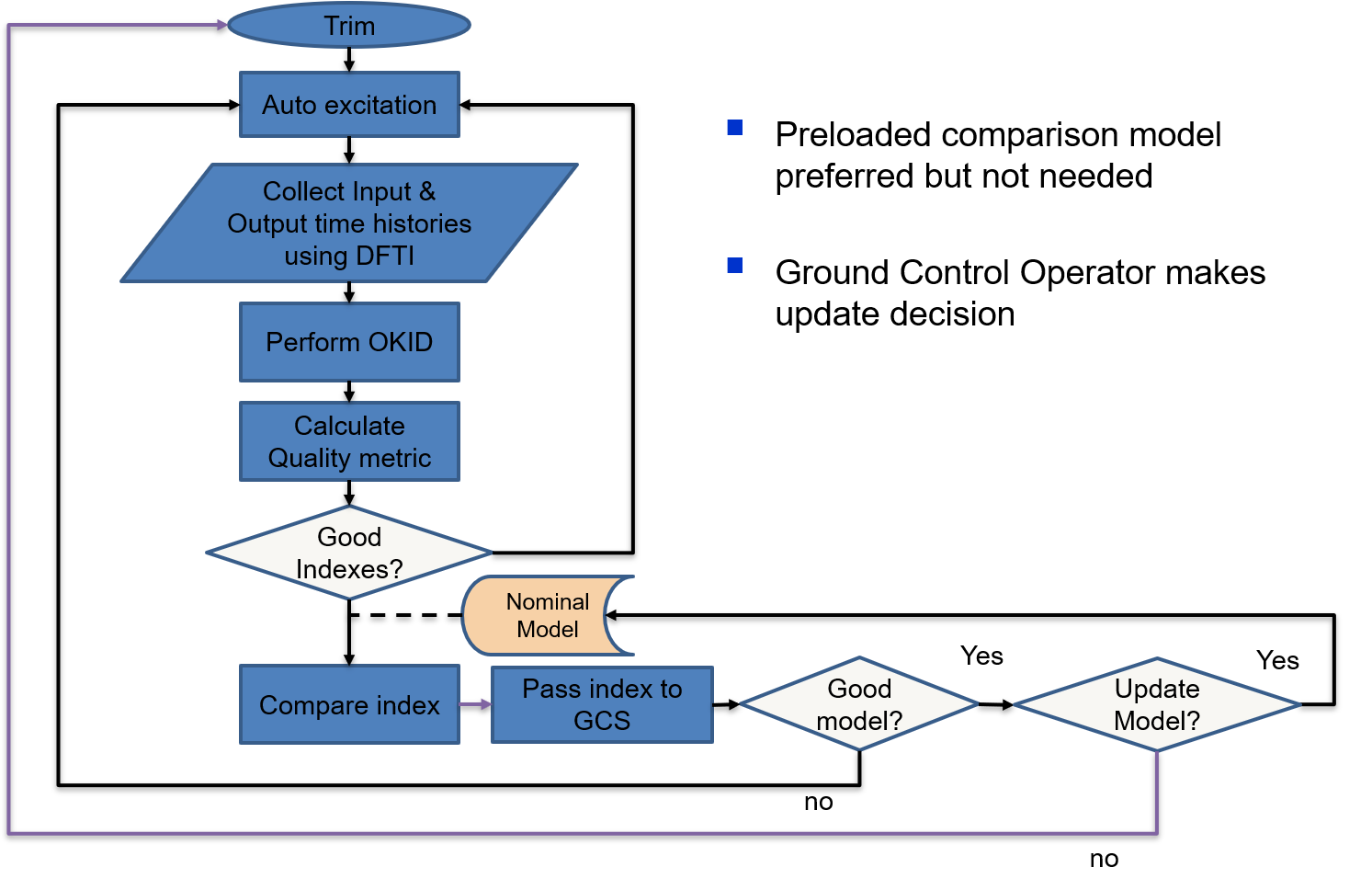

Online Identification Procedure: