Raytheon Company, Intelligence and Information Systems

1 January – 31 December 2013

Co-P.I. Dr. James D. Turner

Total award $250,000

This project consists of applied research that will provide a pathway for transitioning basic academic research at Texas A&M University into larger Raytheon Corporate Research and Development efforts for operational systems.

TECHNICAL OBJECTIVES

- Demonstrate the utility of the motion-based video algorithms developed with the Reinforcement Learning / Approximate Dynamic Programming methodology in Phases I–III.

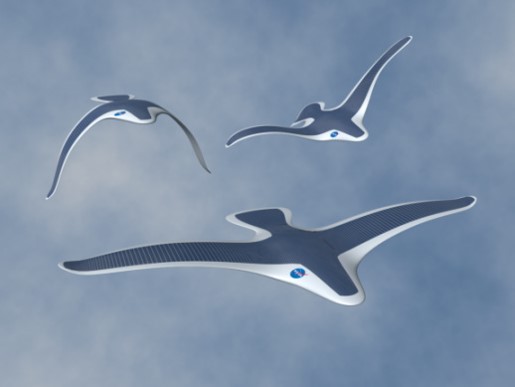

- Develop and demonstrate a Reinforcement Learning / Approximate Dynamic Programming methodology for UAS autonomous soaring.

- Conceive novel platform positioning algorithms in support of advanced UAS platforms.

- Refine and demonstrate video processing algorithms with the Land, Air, and Space Robotics Laboratory (LASR) at Texas A&M University.

Validation and verification flight testing will be conducted using the three Pegasus research UAS owned and operated by the Vehicle Systems & Control Laboratory.

Working with me on this program are the following postdoctoral researchers and students:

- Anshu Siddarth, Postdoctoral Research Associate

- Kenton Kirkpatrick, Postdoctoral Research Associate

- Dipanjan Saha, Ph.D. student

- Jim Henrickson, M.S. student

- Tim Woodbury, M.S. student

- Josh Harris, B.S. student

- Candace Hernandez, B.S. student

- Alejandro Azocar, B.S. student
